A multiyear, multi-stakeholder project by Ubisoft’s La Forge team is making new strides in the procedural automation of characters in real time.

The liveliness of a AAA, open-world game is in large part predicated on how well it pulls you into its universe, how it makes you feel like you’re there – which means that the world’s people can make or break the experience. So these kinds of games are often composed of thousands of characters.

Assassin’s Creed Valhalla alone, for instance, has upwards of 75 named characters, not to mention the thousands of non-playable characters (NPCs) who inject life into the farms, villages, and cities you encounter during your quests by milling about their daily business.

“The more we progress as video game makers, the more characters we need in our video games,” says André Beauchamp, R&D developer. “And making a single head takes a long time.” André is part of Ubisoft La Forge, an incubator that facilitates using academic research and advancements, for instance in machine learning (ML) or complex math, in our tech and games.

Creating hundreds of characters easily adds up to tens of thousands of hours of work, much of which is composed of repetitive, rather than creative, tasks. This is especially true in the creation of NPCs, who are crucial to the game’s atmosphere, but are often just glimpsed for seconds at a time and so don’t need to be as keenly designed as more significant characters. Because of that, games typically come preloaded with a set number of NPCs that appear more or less randomly across various maps.

But when you’re working with a limited set of characters, even a large one, you still run the risk of having your player turn a corner and run into literally the same character they left behind a second ago, which is not the kind of surprise you’re trying to create in-game.

And so with the need for a greater number as well as a broader variety of characters growing steadily, the teams at Ubisoft La Forge and Ubisoft Helix (a team that specializes in the production of visuals and cinematics) identified the repetitive parts of character creation as a challenge that could greatly benefit from automation.

La Forge experts, working alongside dozens of colleagues across Ubisoft productions and studios as well as with doctoral candidates from several universities, have been developing a tool, Faceshifter, that procedurally generates character heads in such a way that they’re diverse but realistic; they fit the context of the scene they are in; and, importantly, their generation doesn’t overtax the resources of the game engine, regardless of the console or computer running the game.

“With a system like Faceshifter, we can generate heads in a few seconds, which therefore allows us to generate, directly in the video game, lively and diverse crowds of various sizes, made up of a bunch of different faces,” says André.

The extensive research project comprises a collection of smaller projects and, to date, has involved dozens of stakeholders, developers, designers and coders, as well as frequent input from production teams, over a few years.

Creating credible new characters: starting with real heads, and then breaking them down

Faceshifter started with what Ubisoft already had: the high-resolution scans of a thousand or so heads produced over the years for our various games, courtesy of Ubisoft Montréal’s Alice motion-capture studio.

However, going from a high-res, 3D scan to an animated character in a video game is an exceptionally data-heavy and time-consuming process; a single head is composed of tens of thousands of data points, and precisely because they are high res, scans are often messy.

La Forge had to figure out how to clean these scans and wrap them—that is to say, turn the images into 3D polygons—to build their starting database.

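Combining different algorithms and ML techniques, the team used the anatomical markers on each scan as targets: a generic base head carries the same reference markers, and morphing those markers onto the scan’s markers pulls the base head into the shape of the real head.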
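To make the marker-driven morph more concrete, here is a minimal sketch in Python. It is not La Forge's method, which the article does not detail; it swaps in plain inverse-distance weighting, where each vertex of the base head moves by a blend of the offsets of nearby markers. The function name `morph_base_head`, the `power` parameter, and the toy coordinates are all assumptions made for illustration.

```python
import math


def morph_base_head(base_vertices, base_markers, scan_markers, power=2.0):
    """Move each base vertex by an inverse-distance-weighted blend of marker offsets.

    Hypothetical helper: a simple stand-in for a landmark-driven morph,
    not the algorithm used in Faceshifter.
    """
    # Offset that carries each base marker onto its matching scan marker.
    offsets = [
        tuple(s - b for s, b in zip(scan, base))
        for base, scan in zip(base_markers, scan_markers)
    ]
    morphed = []
    for vertex in base_vertices:
        weights = []
        exact = None
        for i, marker in enumerate(base_markers):
            d = math.dist(vertex, marker)
            if d == 0.0:
                exact = i  # vertex sits on a marker: follow it exactly
                break
            weights.append(1.0 / d ** power)
        if exact is not None:
            offset = offsets[exact]
        else:
            total = sum(weights)
            offset = tuple(
                sum(w * o[axis] for w, o in zip(weights, offsets)) / total
                for axis in range(3)
            )
        morphed.append(tuple(v + o for v, o in zip(vertex, offset)))
    return morphed


# Toy data: two markers and three vertices on a line (made-up values).
base_markers = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
scan_markers = [(0.0, 0.1, 0.0), (1.2, 0.0, 0.0)]
base_vertices = [(0.0, 0.0, 0.0), (0.5, 0.0, 0.0), (1.0, 0.0, 0.0)]
morphed = morph_base_head(base_vertices, base_markers, scan_markers)
print(morphed)
```

Vertices that coincide with a marker land exactly on the scanned position, while vertices in between receive a smooth mix of the surrounding offsets. A production system would need something far more robust for fine detail such as eyes and ears.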

While they managed to attain a high degree of precision, the small but significant details of the eyes and ears presented their own challenges. La Forge first had to develop an ML system to detect the approximate positions and shapes of eyes and ears, and then combine it with a complex math system that analyzed curves to determine exact intersections—down to the millimetre.

Generating never-before-seen faces that represent humanity in all its diversity

While all these heads have become usable and searchable assets in the Faceshifter database, what’s exciting is the possibility of using the components of these heads as assets in themselves.

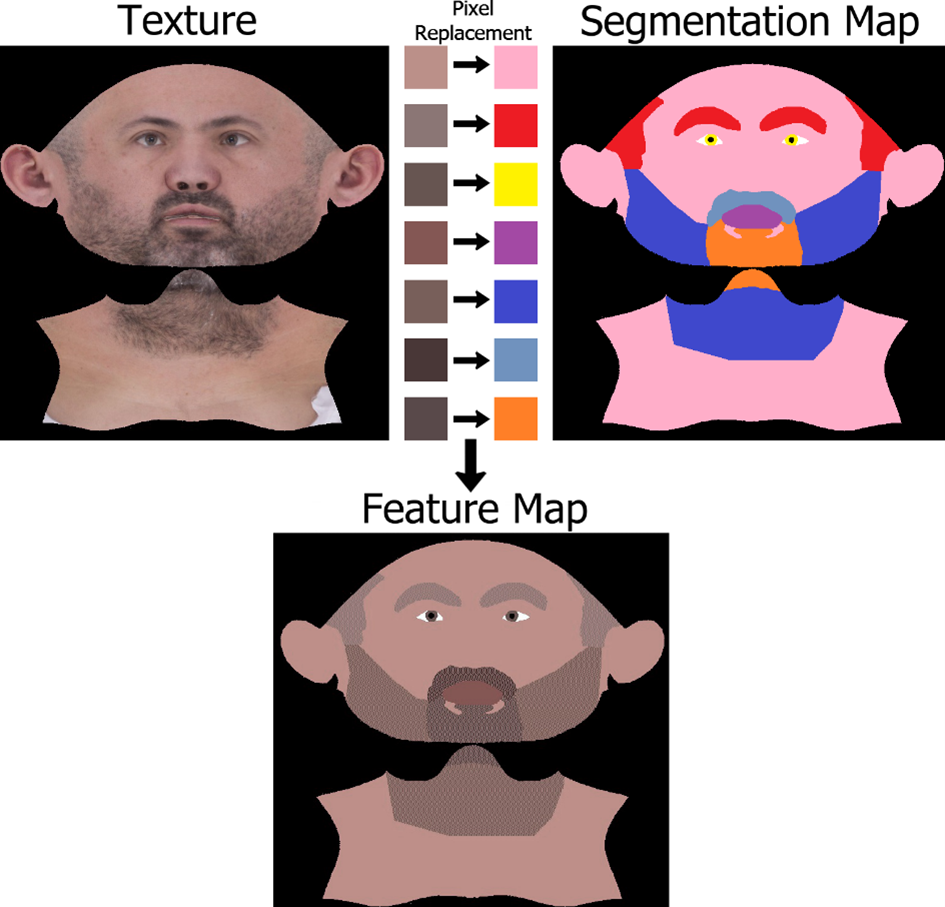

The team broke the scans into component parts of the human head. On the one hand, there’s a collection of segments representing base facial features such as the eyes, mouth, chin, etc. Then there’s the overlaying facial texture: skin colour, consistency, hairiness, etc.

These components can then be mixed, matched, and morphed to create new faces—many, many never-before-seen faces. But always human faces.

“The point of Faceshifter is to create valid humans,” André says. “It shouldn’t be possible to end up with a nose that’s much too big, for instance, because the system will adapt it to fit in that specific face.”

What’s more, the features blend seamlessly: the seams between the differently sourced nose, chin, mouth, etc., are smoothed out thanks to complex math that was the work of Donya Ghafourzadeh, a doctoral student working with La Forge. Donya also worked out the complex 3D geometrical math that determines the appropriate ratios between features and ensures that they are scaled appropriately.
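As a rough illustration of what smoothing a seam can mean, the sketch below blends a donor feature into a host face with a weight that ramps up gradually from the feature's boundary. This is only a toy stand-in for the research described above. It assumes both heads share the same vertex layout (plausible once scans are wrapped onto a common base head), and the names `blend_feature`, `seam_distances`, and `falloff` are hypothetical.

```python
def smoothstep(t):
    """Ease from 0 to 1 with zero slope at both ends, clamping t to [0, 1]."""
    t = max(0.0, min(1.0, t))
    return t * t * (3.0 - 2.0 * t)


def blend_feature(face_vertices, feature_vertices, seam_distances, falloff):
    """Blend a donor feature into a host face.

    seam_distances[i] is how far vertex i lies inside the feature region,
    measured from its boundary. At the boundary the host face wins; at
    `falloff` or deeper, the donor feature wins; in between they mix.
    """
    blended = []
    for face_v, feat_v, d in zip(face_vertices, feature_vertices, seam_distances):
        w = smoothstep(d / falloff)
        blended.append(
            tuple((1.0 - w) * f + w * g for f, g in zip(face_v, feat_v))
        )
    return blended


# Toy data: three vertices at increasing depth inside a donor nose region.
host = [(0.0, 0.0, 0.0)] * 3
donor = [(2.0, 4.0, 6.0)] * 3
blended = blend_feature(host, donor, seam_distances=[0.0, 0.5, 1.0], falloff=1.0)
print(blended)
```

Because the weight has zero slope at both ends of the ramp, the donor feature fades into the host face without a visible crease, which is the intuition behind a seamless blend.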

At the same time, the La Forge team has been using ML to study the database of heads and draw conclusions about facial attributes. This has led to using principal component analysis (PCA) modifications to guide the system in generating random, realistic heads based on certain attributes, for instance age, size, build (amount of fat on the face), gender, ethnicity and more.
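For readers unfamiliar with PCA, the sketch below shows the basic idea on made-up data: find the main axis along which a set of heads varies, then generate a new head by moving a bounded distance along that axis from the average head. It is a simplified assumption of how such a system could work, not Faceshifter's implementation. The three measurements per head, the function names, and the two-standard-deviation clamp are all hypothetical, and only the first component is extracted (using power iteration) to keep the example short.

```python
import random


def first_principal_component(rows, iterations=200):
    """Return (mean, unit direction, standard deviation) of the main axis of variation."""
    n = len(rows)
    mean = [sum(col) / n for col in zip(*rows)]
    centered = [[x - m for x, m in zip(row, mean)] for row in rows]
    dim = len(mean)
    # Sample covariance matrix of the centered data.
    cov = [
        [sum(r[i] * r[j] for r in centered) / (n - 1) for j in range(dim)]
        for i in range(dim)
    ]
    # Power iteration: repeated multiplication converges to the dominant
    # eigenvector, provided the start vector is not orthogonal to it.
    direction = [1.0] * dim
    for _ in range(iterations):
        nxt = [sum(cov[i][j] * direction[j] for j in range(dim)) for i in range(dim)]
        norm = sum(x * x for x in nxt) ** 0.5
        direction = [x / norm for x in nxt]
    variance = sum(
        direction[i] * cov[i][j] * direction[j]
        for i in range(dim)
        for j in range(dim)
    )
    return mean, direction, variance ** 0.5


def generate_head(mean, direction, sigma, rng, max_sigmas=2.0):
    """Sample a new head along the component, clamped so it stays plausible."""
    coeff = max(-max_sigmas, min(max_sigmas, rng.gauss(0.0, 1.0)))
    return [m + coeff * sigma * d for m, d in zip(mean, direction)]


# Made-up measurements per head: face width, jaw width, nose length.
heads = [
    [14.0, 11.0, 5.0],
    [15.0, 12.0, 5.2],
    [16.0, 13.0, 4.9],
    [17.0, 14.0, 5.1],
]
mean, direction, sigma = first_principal_component(heads)
new_head = generate_head(mean, direction, sigma, random.Random(42))
print(direction, new_head)
```

In this toy data, face width and jaw width grow together, so the component captures something like overall head size. Clamping the coefficient keeps generated heads close to the range seen in the scans, which echoes the goal of only ever producing valid humans.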

The principle is similar to certain sliders found in RPG character customization features, and is used to render characters that fit their context. Faceshifter should be very versatile in the diversity of heads it can render while producing characters that fit the locations and eras presented in any given game.

So, for instance, a game designer could indicate that automatically generated characters in a specific location should share certain characteristics such as ethnicity or age group; or that, conversely, the characters should be highly diverse.
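One way to picture this kind of designer control is as a per-location profile of allowed slider ranges. The sketch below is purely hypothetical: the attribute names `age` and `build` are borrowed from the list above, but the 0-to-1 slider scale, the profile format, and the function `sample_head_parameters` are assumptions, not Faceshifter's interface.

```python
import random


def sample_head_parameters(profile, rng):
    """Draw one value per attribute, uniformly within the designer's range."""
    return {name: rng.uniform(low, high) for name, (low, high) in profile.items()}


# Narrow ranges produce a homogeneous crowd, wide ranges a diverse one.
# All sliders run from 0.0 to 1.0 in this made-up scheme.
village_elders = {"age": (0.8, 1.0), "build": (0.3, 0.5)}
port_city = {"age": (0.0, 1.0), "build": (0.0, 1.0)}

rng = random.Random(7)
elders = [sample_head_parameters(village_elders, rng) for _ in range(5)]
crowd = [sample_head_parameters(port_city, rng) for _ in range(5)]
print(elders[0], crowd[0])
```

The sampled values would then feed whatever generator turns attribute settings into an actual head, so the same system can serve both a tightly themed village and a cosmopolitan port.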

Busting a move without busting the compute resources budget

Given enough time and computing resources, Faceshifter can generate realistic, high-resolution human faces that are of high enough quality that they can be used in large-scale static images and even high-def cinematics. It can do this because the heads are generated in meshes made up of thousands of vertices, or control points, that can be used to not only animate the face, but deform it to create new characters, as described above.

But the time and compute power needed to do this are simply not available in a video game—even the most modern consoles would not be able to render in real time a crowd of diverse faces that, to boot, must be in three dimensions and animated.

“Even if some modern consoles might be able to handle this, it’s not necessarily where we want to put our compute budget,” says Marc-André Bleau, R&D developer at La Forge.

For the last 10 years, Ubisoft has been using a tool called Facebuilder, which works with a few hundred “bones” to animate a face, allowing the character to open their mouth, blink, and more. La Forge had to find a way to scale down and port Faceshifter learnings into Facebuilder while losing as little control and versatility as possible, and in the process incidentally evolved an innovative additional way of generating NPC faces.

“The idea was that instead of generating a mesh, we would add points of control to Facebuilder so it could go beyond animating a face to deforming, customizing, transforming it,” Marc-André says. “So we moved to no longer using a mesh, but rather using the Facebuilder interface, with its more abstract, higher-level controls, that hide a lot more logic underneath, but are more efficient. There’s a lot less control, but a lot more speed.”

A future of infinite characters… and challenging projects

The Faceshifter project has generated, or coincided with, many other Ubisoft ventures. One such project is Bodyshifter which, you might have guessed, aims to do with bodies what Faceshifter does with faces. La Forge is in the early stages of this project, which should procedurally generate a physique to complement the automatic head, giving production access to a near-infinite number of fully embodied secondary characters.

Another mission that La Forge is exploring is the animation of body language and emotional states. “Right now, an NPC’s forehead is almost always very smooth,” Marc-André says. “We need to have their body language inform the player about how they feel.” To do so, La Forge researchers are identifying the typical facial and body motions associated with categories of emotions and reactions. They will then find ways of having the character move, or behave, the “correct” way automatically according to context.

Similarly, an audio project has been evolving in parallel to generate movement in a character from sound—think of it as NPC karaoke. We’ll be sharing more about this deep-learning sound-matching project soon; you can learn more here and here.

A character is more than just a face, and as the La Forge team discovers the possibilities of Faceshifter, the list of next steps keeps growing, filled with fascinating mathematical, programming and artistic challenges.