Every year Ubisoft records hundreds of thousands of dialog lines that bring to life the worlds in its games. Processing these lines amounts to a considerable load in manual work hours and delays the delivery of the dialog lines to game development teams. The goal of VoRACE is to automate tedious tasks performed on recordings to accelerate the overall workflow, while maintaining the same level of quality.

This blog focuses on the trimming of raw audio recordings. The trimming task consists of cropping superfluous parts of the recording, such as silences and breathing noises, to keep only the intended dialogue line. Figure 1 shows examples of recordings before and after trimming. While removing silent portions of a signal is straightforward, challenges arise when other unwanted sounds are recorded before and after the dialog line. These unwanted sounds can be studio chatter, breaths, coughing or microphone manipulations. They render off-the-shelf solutions inapplicable because those solutions often operate on loudness thresholds: they keep any sound that contains enough energy, without regard to its nature or context.

This motivated us to create a machine learning system that recognizes dialog by analyzing the audio content instead of relying only on energy measurement. Our approach is based on convolutional neural networks. The network is trained to reproduce the manual labor performed on hours of studio recordings.

Figure 1: Concept of trimming

Context

As mentioned earlier, off-the-shelf silence removal plugins detect salient parts of the signal by comparing its energy to one or a series of parametrized thresholds. These thresholds need tuning for each recording session which is time-consuming and impractical. They are prone to error because they do not recognize the type of sounds that triggers them. For example, loud artifacts like tongue clicks or heavy breathing will be kept while soft pronunciations of letters at the end of sentences can avoid detection.
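To make the failure mode concrete, here is a minimal sketch of an energy-threshold trimmer. It is not the code of any particular plugin: the function names, the frame length and the threshold value are all hypothetical, chosen only to show how a loud click is kept while a soft final consonant is cut.

```python
def frame_energies(samples, frame_len):
    """Mean squared amplitude of consecutive, non-overlapping frames."""
    return [
        sum(x * x for x in samples[i:i + frame_len]) / frame_len
        for i in range(0, len(samples) - frame_len + 1, frame_len)
    ]


def threshold_trim(samples, frame_len, threshold):
    """Return (first, last) indices of frames louder than the threshold, or None."""
    energies = frame_energies(samples, frame_len)
    loud = [i for i, e in enumerate(energies) if e > threshold]
    if not loud:
        return None
    return loud[0], loud[-1]


# Toy signal with 4 samples per frame (hypothetical values):
# frame 0: silence, frame 1: loud tongue click, frame 2: silence,
# frames 3-4: speech, frame 5: soft final consonant, frame 6: silence
signal = (
    [0.0] * 4
    + [0.9, -0.9, 0.9, -0.9]
    + [0.0] * 4
    + [0.5, -0.5, 0.5, -0.5] * 2
    + [0.05, -0.05, 0.05, -0.05]
    + [0.0] * 4
)
result = threshold_trim(signal, frame_len=4, threshold=0.01)
```

With these values the trim starts at the click (frame 1) and stops before the soft consonant (frame 5). Raising or lowering the threshold only trades one error for the other, which is why per-session tuning is needed.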

Voice Activity Detection (VAD) has the same problem. VAD components are often used in telecommunication systems to avoid transmitting silent frames to reduce bandwidth and energy costs. They are designed to function in noisy streams of audio and trigger whenever voice is present. However, this design means that common occurrences in studio recordings, like side commentary by voice actors or background chatter by the recording crew, will erroneously be detected as dialogue.

The recordings below illustrate failure cases for these approaches.

background chatter

self chatter

commentary

background chatter

heavy breathing

Methods

Ideally, our system would understand the nature of the sounds in the recording. The system should never remove dialog content while minimizing unnecessary tail silences. In addition, it should distinguish between relevant dialogue and chatter and recognize unwanted noises. Finally, the system should be able to assert confidence in its decisions in order to direct the attention of a sound engineer to more difficult samples. This ensures that we maintain the same level of quality as a fully manual process.

We formulate the trimming problem as a dialogue activity detection task. This enables us to leverage the recent advances in Voice Activity Detection. This is a classification task in which our system predicts whether a small frame in the recording corresponds to dialogue or not.

Our system views each audio frame as a combination of mel-spectrum and other acoustic features. Mel-spectrums are spectrums rescaled in a non-linear way that mimics the way humans perceive sound. These mel-spectrums are concatenated in time to form mel-spectrograms. Other features include acoustic descriptors of the signal, such as the energy, zero-crossing rate, and relative cumulative energy of the frame, and share the same temporal resolution as the mel-spectrograms.
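As an illustration of the non-linear rescaling, the sketch below uses one common mel formula (2595 · log10(1 + f / 700)). The blog does not say which mel variant, frequency range or number of bands the system uses, so the formula choice, the 0 to 8000 Hz range and the 8 bands are assumptions made only for this example.

```python
import math


def hz_to_mel(freq_hz):
    """Convert a frequency in Hz to mels (one common formula; an assumption here)."""
    return 2595.0 * math.log10(1.0 + freq_hz / 700.0)


def mel_to_hz(mel):
    """Inverse of hz_to_mel."""
    return 700.0 * (10.0 ** (mel / 2595.0) - 1.0)


def mel_band_edges(f_min, f_max, n_bands):
    """Edges, in Hz, of n_bands bands that are equally wide on the mel scale."""
    lo, hi = hz_to_mel(f_min), hz_to_mel(f_max)
    step = (hi - lo) / n_bands
    return [mel_to_hz(lo + i * step) for i in range(n_bands + 1)]


# Hypothetical configuration: 8 bands between 0 Hz and 8 kHz
edges = mel_band_edges(0.0, 8000.0, 8)
widths = [b - a for a, b in zip(edges, edges[1:])]
```

The bands are narrow at low frequencies and widen toward high frequencies, which mirrors the finer resolution of human hearing in the low range.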

We measure the energy over the full spectrum and over specific regions of the spectrum, specifically a lower and higher band. Two different window sizes are used when measuring the energy, yielding a total of six energy feature vectors. We also compute the zero-crossing rate, which corresponds to the rate at which the signal changes polarity. Finally, we sum the cumulative energy seen at each timestep and normalize it between 0 and 1. This gives a sense of position to the model.
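The time-domain descriptors above can be sketched in a few lines. This is an illustrative version only: the frame length, hop size and function names are hypothetical, and the band-limited energies (which need a spectral decomposition) are left out, so only the full-band energy, the zero-crossing rate and the relative cumulative energy are shown.

```python
def frame_signal(samples, frame_len, hop):
    """Split a signal into frames of frame_len samples, advancing by hop samples."""
    return [
        samples[i:i + frame_len]
        for i in range(0, len(samples) - frame_len + 1, hop)
    ]


def energy(frame):
    """Mean squared amplitude of one frame."""
    return sum(x * x for x in frame) / len(frame)


def zero_crossing_rate(frame):
    """Fraction of consecutive sample pairs whose signs differ."""
    crossings = sum(1 for a, b in zip(frame, frame[1:]) if (a >= 0) != (b >= 0))
    return crossings / (len(frame) - 1)


def relative_cumulative_energy(energies):
    """Running sum of frame energies, normalized to end at 1."""
    total = sum(energies)
    if total == 0:
        return [0.0] * len(energies)
    out, running = [], 0.0
    for e in energies:
        running += e
        out.append(running / total)
    return out


# Toy signal: silence, a fast oscillation, a slow oscillation, silence
samples = [0.0] * 4 + [1.0, -1.0, 1.0, -1.0] + [0.5, 0.5, -0.5, -0.5] + [0.0] * 4
frames = frame_signal(samples, frame_len=4, hop=4)
energies = [energy(f) for f in frames]
zcr = [zero_crossing_rate(f) for f in frames]
position = relative_cumulative_energy(energies)
```

The cumulative curve rises from 0 to 1 as the recording progresses, so a frame near 0 is likely before the line and a frame near 1 is likely after it.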

Table 1 summarizes the input features:

Table 1: Acoustic features used for model input

We group several predictive models to form an ensemble. Ensembles have been shown to increase prediction accuracy and stability. Ensembling has also proven to be a reliable method for uncertainty estimation [1]. All predictive models share the same architecture, but each has a unique set of weights. To enforce diversity, each model has been trained on a slightly different data set, a method commonly known as bagging [2].

When processing an input, each model returns a score indicating the probability of a frame being dialog. These scores are averaged to obtain the final prediction.
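The averaging step can be sketched as follows. The number of models and the score values are made up for the example, and `ensemble_average` is a hypothetical helper name.

```python
def ensemble_average(model_scores):
    """Average per-frame dialogue probabilities across models.

    model_scores is a list with one entry per model; each entry is a list of
    per-frame probabilities, and all entries have the same length.
    """
    n_models = len(model_scores)
    return [sum(frame_scores) / n_models for frame_scores in zip(*model_scores)]


# Three hypothetical models scoring the same three frames
scores = [
    [0.9, 0.2, 0.6],
    [0.8, 0.1, 0.4],
    [1.0, 0.3, 0.5],
]
final = ensemble_average(scores)
```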

Our model architecture is based on convolutional neural networks. Convolutional layers process several mel-spectrum frames with a fixed-size receptive field. The resulting feature maps are flattened and combined with the acoustic features into a single vector. A fully connected layer followed by a softmax activation function produces the final class scores from that feature vector.

The system is trained on game dialogue audio files. We need ground truth annotation for every frame of every raw recording. Typically, annotating data for supervised learning is a time-consuming process. Fortunately, we have access to over 20 years’ worth of Ubisoft game dialogue. Comparing the raw recordings with their manually trimmed counterparts yields the trim locations. From these locations, we identify the class of every frame in a signal. We train our models on mini batches of audio frames sampled from various recordings in the training dataset.
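One way to picture the label extraction is sketched below. It assumes the trimmed file is an exact sample-for-sample excerpt of the raw recording and labels a frame as dialogue when its center falls inside the excerpt. Both assumptions, the helper names and the frame settings are ours for illustration; the blog does not describe how the comparison is actually done.

```python
def find_trim_offset(raw, trimmed):
    """Index in raw where trimmed starts, or None if it is not an exact excerpt."""
    n, m = len(raw), len(trimmed)
    for start in range(n - m + 1):
        if raw[start:start + m] == trimmed:
            return start
    return None


def frame_labels(n_samples, start, end, frame_len, hop):
    """Label each frame 1 (dialogue) if its center lies in [start, end), else 0."""
    labels = []
    for begin in range(0, n_samples - frame_len + 1, hop):
        center = begin + frame_len // 2
        labels.append(1 if start <= center < end else 0)
    return labels


# Toy recording: 4 silent samples, 8 samples of dialogue, 4 silent samples
raw = [0, 0, 0, 0, 3, -2, 5, 1, -4, 2, 6, -1, 0, 0, 0, 0]
trimmed = raw[4:12]

start = find_trim_offset(raw, trimmed)
end = start + len(trimmed)
labels = frame_labels(len(raw), start, end, frame_len=4, hop=2)
```

The exhaustive search is quadratic and only suitable for a toy example. Real recordings may also differ from their trimmed versions by gain changes or fades, which an exact match would not tolerate.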

When processing a whole dialog line, predictions on audio frames are concatenated into a detection curve. Patterns uncharacteristic of human speech can appear in the curve, such as quick impulse-like detections in silences or short silences in dialogue areas. Out-of-distribution data or unexpected model behavior may cause these spurious events. We filter them out when they last less than a few hundred milliseconds. The first and last frames marked as dialogue correspond to the cut locations.

The system can fail when faced with difficult recordings. For example, a line may contain ambiguous dialogue such as intended breathing at the beginning, deliberate pauses in the middle of the sentence or drawn-out quiet syllables at the end. Our system identifies these difficult examples for inspection by a sound engineer.
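The cleanup of the detection curve described above (thresholding, removing short spurious events, then taking the first and last dialogue frames) can be sketched as follows. The 0.5 decision threshold, the minimum duration of 3 frames and the order of the two filtering passes are assumptions for illustration; the blog only states that events shorter than a few hundred milliseconds are filtered out.

```python
def runs(flags):
    """Split a 0/1 sequence into (value, start, end) runs, end exclusive."""
    out, start = [], 0
    for i in range(1, len(flags) + 1):
        if i == len(flags) or flags[i] != flags[start]:
            out.append((flags[start], start, i))
            start = i
    return out


def remove_short_runs(flags, value, min_len, interior_only=False):
    """Flip runs of `value` shorter than min_len frames to the other class."""
    cleaned = list(flags)
    for v, start, end in runs(flags):
        touches_edge = start == 0 or end == len(flags)
        if v != value or end - start >= min_len:
            continue
        if interior_only and touches_edge:
            continue
        for i in range(start, end):
            cleaned[i] = 1 - value
    return cleaned


def cut_locations(scores, threshold=0.5, min_len=3):
    """Return (first, last) dialogue frame indices after filtering, or None."""
    flags = [1 if s >= threshold else 0 for s in scores]
    # Fill short silences that sit between two detections
    flags = remove_short_runs(flags, value=0, min_len=min_len, interior_only=True)
    # Drop short impulse-like detections
    flags = remove_short_runs(flags, value=1, min_len=min_len)
    dialogue = [i for i, f in enumerate(flags) if f == 1]
    if not dialogue:
        return None
    return dialogue[0], dialogue[-1]


# Toy curve: silence, 1-frame blip, silence, dialogue with a 2-frame gap, silence
scores = [0.1] * 4 + [0.9] + [0.1] * 4 + [0.9] * 5 + [0.2] * 2 + [0.8] * 4 + [0.1] * 3
cuts = cut_locations(scores)
```

In this toy curve the isolated blip at frame 4 is discarded and the two-frame gap inside the line is bridged, so the cuts land on frames 9 and 19.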

Flagging these difficult recordings helps ensure that we provide the same level of quality as fully manual work. The idea is illustrated in Figure 2. The system returns a confidence score for each cut location and identifies strange detection patterns using heuristics based on domain knowledge.

Figure 2: Rejection mechanism

We measure cut confidence by averaging decision confidence on frames surrounding the cut location (see Figure 3). To compute this average, we select the confidence associated with the expected class before and after the cut. For instance, at the beginning, we expect silence before the cut and dialogue after. We compute the cut confidence over three different time windows and retain the lowest as the final confidence.

Figure 3: Cut confidence

The confidence mechanism compares the confidence of the prediction with a threshold to keep only high certainty trims. Since both the begin and end cut of a prediction have an associated cut confidence, the comparison uses the average across the two cuts. This mechanism also draws the attention of the sound engineer to what types of dialogue result in low confidence predictions.
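A minimal sketch of the cut confidence and of the acceptance test follows. It assumes per-frame scores are dialogue probabilities, so the confidence in "silence" is one minus the score. The three window sizes (2, 4 and 8 frames on each side) and the 0.9 threshold are hypothetical values, as the blog does not give them.

```python
def cut_confidence(scores, boundary, kind, half_windows=(2, 4, 8)):
    """Lowest average confidence in the expected classes around a cut.

    boundary splits the curve into frames before (index < boundary) and
    after (index >= boundary). For a "begin" cut we expect silence before
    and dialogue after; for an "end" cut, the opposite.
    """
    per_window = []
    for w in half_windows:
        before = scores[max(0, boundary - w):boundary]
        after = scores[boundary:boundary + w]
        if kind == "begin":
            conf = [1.0 - p for p in before] + list(after)
        else:
            conf = list(before) + [1.0 - p for p in after]
        if conf:
            per_window.append(sum(conf) / len(conf))
    return min(per_window) if per_window else 0.0


def accept_trim(scores, begin, end, threshold=0.9):
    """Accept a trim when the average of the two cut confidences is high enough."""
    begin_conf = cut_confidence(scores, begin, "begin")
    end_conf = cut_confidence(scores, end, "end")
    return (begin_conf + end_conf) / 2.0 >= threshold


# A clean line, and one with an ambiguous lead-in (e.g. an audible breath)
clean = [0.0] * 10 + [1.0] * 10 + [0.0] * 10
ambiguous = [0.0] * 6 + [0.5] * 4 + [1.0] * 10 + [0.0] * 10

clean_ok = accept_trim(clean, begin=10, end=20)
ambiguous_ok = accept_trim(ambiguous, begin=10, end=20)
ambiguous_begin_conf = cut_confidence(ambiguous, 10, "begin")
```

Keeping the minimum over the windows makes the score pessimistic: a cut is only considered confident if it looks clean at every time scale.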

Domain knowledge-based rejection mechanisms rely on traditional signal and dialogue processing. These heuristics include, for example, ignoring detected dialogue areas with low energy (which are expected to be background chatter detections), rejecting empty predictions, and rejecting predictions with multiple detection zones (difficult samples with narrative pauses in the middle).
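The three heuristics named above could be combined along these lines. The energy floor, the data layout and the reason strings are invented for the example; this is a sketch of the idea rather than the rules used in production.

```python
def detection_zones(flags):
    """Contiguous runs of dialogue frames as (start, end) pairs, end exclusive."""
    zones, start = [], None
    for i, f in enumerate(flags):
        if f and start is None:
            start = i
        elif not f and start is not None:
            zones.append((start, i))
            start = None
    if start is not None:
        zones.append((start, len(flags)))
    return zones


def apply_heuristics(flags, frame_energy, min_zone_energy):
    """Drop low-energy zones, then list reasons to reject the prediction."""
    kept = []
    for start, end in detection_zones(flags):
        mean_energy = sum(frame_energy[start:end]) / (end - start)
        if mean_energy >= min_zone_energy:  # quieter zones are treated as chatter
            kept.append((start, end))
    reasons = []
    if not kept:
        reasons.append("empty prediction")
    if len(kept) > 1:
        reasons.append("multiple detection zones")
    return kept, reasons


# Faint background chatter followed by the actual line
flags = [0, 1, 1, 0, 0, 1, 1, 1, 0]
frame_energy = [0.0, 0.01, 0.01, 0.0, 0.0, 0.5, 0.6, 0.4, 0.0]
zones, reasons = apply_heuristics(flags, frame_energy, min_zone_energy=0.05)

# A line with a narrative pause in the middle
paused_zones, paused_reasons = apply_heuristics(
    [0, 1, 1, 0, 1, 1, 0], [0.5] * 7, min_zone_energy=0.05
)
```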

Assessing the trim accuracy of the system involves checking if the estimated cut locations match the true cut locations on a recording. It is an awkward task as there is no unique way of trimming: two raters will likely trim a sample differently, with both results being acceptable. Because our training dataset contains samples from a large variety of raters, the model learns to predict a good trim on average, not the trimming routine of a single rater. Expecting the system to match exactly a ground truth (from a single rater) would be unreasonable and unproductive.

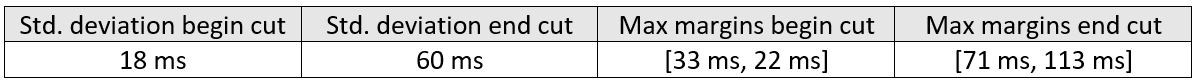

We consider predictions acceptable if they land close to the true cut location. The challenge is to measure this tolerance range. The range is established from two tests done with our partners in production.

The first test estimates heterogeneity in manual trims. We measure the standard cut location deviation and average maximum margins across 25 identical samples trimmed by ten raters. This deviation tells us how varied manual cut locations can be depending on the position of the cut, while max margin informs us on possible spans seen among trims. Results can be seen in Table 2 below.

Table 2: Tolerance window manual trim test

Despite the first test pointing to a smaller margin, we believe larger deviations from a true cut are still valid and will not be noticed by ear. The second test gauges how close to the dialogue it is possible to trim without impacting sound quality. Around a hundred difficult files are systematically trimmed closer to or farther from the dialogue with pre-specified increments. These modified files are sent to professionals and amateurs alike and rated on the acceptability of their trim. Results are shown in Graph 1 below. Iterations denote two different versions of the test, with tighter and larger trim increments. The graph only shows scores belonging to increments bringing the cut closer to the dialogue.

Graph 1: Tolerance window listening test


These two tests provide sufficient empirical evidence to characterize the tolerance range. The first test informs us this tolerance window is asymmetrical, with a tighter window around the begin cut and a looser window around the end cut, while the second test informs us on what proximity to the dialogue can be allowed for predictions. Since cuts farther from the dialogue were less noticeable, a greater span can be allowed in those regions. The final tolerance window ([close to dialogue, far from dialogue]) has a [30 ms, 100 ms] and [60 ms, 200 ms] span around the begin and end cut, respectively.
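The final tolerance window translates into a simple acceptance check. The sketch below assumes cut times in milliseconds and inclusive bounds, and it interprets "close to dialogue" as later than the true cut for the begin cut and earlier than the true cut for the end cut. That reading of the directions is ours, and the names are hypothetical.

```python
# (toward dialogue, away from dialogue) spans in milliseconds, from the text
TOLERANCE_MS = {"begin": (30.0, 100.0), "end": (60.0, 200.0)}


def within_tolerance(predicted_ms, true_ms, kind):
    """True if a predicted cut lies inside the asymmetric tolerance window."""
    toward, away = TOLERANCE_MS[kind]
    offset = predicted_ms - true_ms
    if kind == "begin":
        # A later begin cut moves toward the dialogue, an earlier one away from it
        return -away <= offset <= toward
    # An earlier end cut moves toward the dialogue, a later one away from it
    return -toward <= offset <= away


begin_checks = [
    within_tolerance(1020.0, 1000.0, "begin"),  # 20 ms into the dialogue
    within_tolerance(1040.0, 1000.0, "begin"),  # 40 ms into the dialogue
    within_tolerance(920.0, 1000.0, "begin"),   # 80 ms of extra lead-in
]
end_checks = [
    within_tolerance(4950.0, 5000.0, "end"),    # 50 ms into the dialogue
    within_tolerance(5190.0, 5000.0, "end"),    # 190 ms of extra tail
    within_tolerance(5210.0, 5000.0, "end"),    # 210 ms of extra tail
]
```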

Results and Discussion

Table 3 describes the accuracy on non-rejected data and the rejection rate of our system when tested on game data from various projects. The accuracy represents the model’s ability to correctly predict cut locations within the tolerance range around ground truth cuts.

The accuracy and rejection rate vary across datasets, with an average accuracy of 75.4% and an average rejection rate of 36.7%. The variability stems from the nature of dialogue lines in the games. Some types of lines are more difficult to trim and their distribution differs across games. We consider the system performance in preliminary experiments to be sufficiently good to deploy a prototype in production for evaluation in a real-world scenario.

Table 3: Accuracy of predictions on research datasets

Table 4 describes the performance of our system when used in a post-processing session consisting of 80 000 recordings. Accuracy (correct/incorrect) and rejection status (accepted/rejected) categorize the predictions. The raters determine the accuracy of predictions: any automatic trim needing modifications is considered incorrect. The rejection flags returned by the system determine the rejection status.

On one hand, while the system rejected 1/4 of its predictions, closer examination by the rater reveals most of these rejections are correct (98.1%). On the other hand, almost none of the accepted predictions are incorrect. This result highlights the system’s robustness to false positives, which is crucial in this case as the user needs to trust the automatic predictions.

Table 4: Accuracy of predictions in practice

Results from the same post-processing session, shown in Table 5, demonstrate the efficiency our system brings to the workflow. We observe a ten-fold acceleration in dialogue processing when using the auto-trimmer. Table 5 shows the recorded processing times. Technically, the system reduces the total amount of time required to trim only by a factor of 2 (220 hours vs. 560 hours), but most of the work done by the auto-trimmer happens in the background, during which time the user can complete other tasks. Therefore, only a fraction of the auto-trimmer’s processing time (60 hours) requires manual work.

Table 5: Execution time of manual trimming vs. automatic trimming during production

Conclusion

As the size and richness of video games increase, the task of populating their virtual worlds with dialogues and interactions becomes daunting. This task requires considerable time and effort, which includes the recording and post-processing of dialogues in studio. In fact, for every hour of recorded dialogue, two hours of manual post-processing are required. These unavoidable delays slow down delivery of dialogue assets to production teams, which increases global game development time. In this blog we propose a different and faster approach to dialogue processing, which leverages computer-assisted creator tools. We apply this approach to one of the dialogue processing steps called trimming, where audio engineers remove superfluous parts of a recording to keep only the dialogue. Powered by machine learning, our auto-trimmer takes a batch of recordings and returns trim recommendations and associated confidence levels to the user. It also flags uncertain predictions for easier verification by the user. The tool fits in an alternate workflow where, instead of manually doing all the post-processing, the user reviews the automatic predictions with particular attention to uncertain ones. Early results from its use in production are promising, with a ten-fold acceleration compared to manual trimming. The Voice Recording Automatic Cleaning Engine (VoRACE) aims to provide a complete automatic dialogue post-processing toolkit to sound experts. It brings together various research initiatives, with the auto-trimmer as a first step.

Bibliography

[1] Lakshminarayanan, B., Pritzel, A., Blundell, C. Simple and Scalable Predictive Uncertainty Estimation using Deep Ensembles. 2016. https://arxiv.org/abs/1612.01474

[2] Breiman, L. Bagging predictors. Mach Learn 24, 123–140 (1996). https://doi.org/10.1007/BF00058655